Hello and good morning,

Could someone please sanity-check an issue I am having with distributed polling?

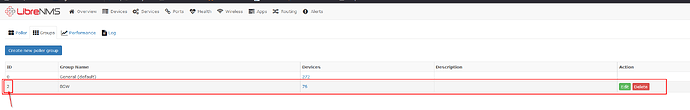

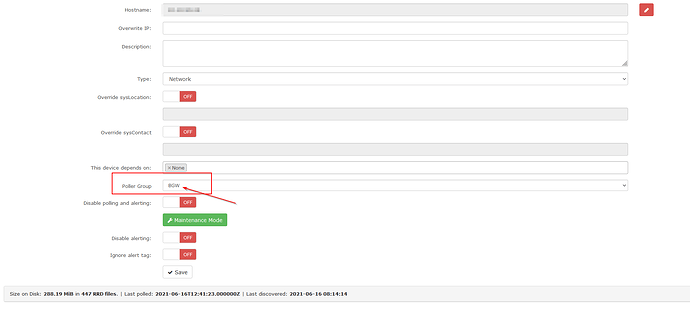

I have an environment with three servers: one running the web server, RRDCached, Memcached and the base LibreNMS poller; one SQL server; and one additional poller. The issue I am having is that the second poller does not show up in the poller list.

My base LibreNMS .env file is:

APP_KEY=base64:a_key

DB_HOST=a_db_server

DB_DATABASE="a_db"

DB_USERNAME="a_user"

DB_PASSWORD="a_password"

APP_URL=/

NODE_ID=5d22fc06e1fa6

LIBRENMS_USER=librenms

REDIS_HOST=localhost

REDIS_DB=0

REDIS_PASSWORD="a_password"

REDIS_PORT=6379

My poller .env file is:

APP_KEY=base64:a_key

DB_HOST=a_db_server

DB_DATABASE="a_db"

DB_USERNAME="a_user"

DB_PASSWORD="a_password"

APP_URL=/

NODE_ID=5d22fc06e1fa6

LIBRENMS_USER=librenms

REDIS_HOST=localhost

REDIS_DB=0

REDIS_PASSWORD="a_password"

REDIS_PORT=6379
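Looking at the two files side by side, they appear to be identical, including `NODE_ID`. To rule out a copy-and-paste mistake, here is a minimal Python sketch that lists every key whose value is the same on both nodes. It assumes each node's .env has been copied to one machine under placeholder file names, and it uses a simplified `KEY=VALUE` parser rather than a full dotenv library. My assumption (not verified) is that a value such as `NODE_ID` is meant to be different on each node.

```python
"""Compare two LibreNMS-style .env files and report keys with identical values.

Hypothetical helper: the file names passed to report() are placeholders,
not paths that LibreNMS itself defines.
"""
from pathlib import Path


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments.

    Surrounding straight or curly double quotes are stripped from values.
    This is a simplified parser, not a full dotenv implementation.
    """
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first "=" only, so base64 keys ending in "=" survive.
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"\u201c\u201d')
    return values


def shared_values(a: dict[str, str], b: dict[str, str]) -> list[str]:
    """Return the sorted keys present in both mappings with identical values."""
    return sorted(k for k in a.keys() & b.keys() if a[k] == b[k])


def report(base_path: str, poller_path: str) -> list[str]:
    """Read two .env files and return "KEY=value" for every identical entry."""
    base = parse_env(Path(base_path).read_text())
    poller = parse_env(Path(poller_path).read_text())
    return [f"{key}={base[key]}" for key in shared_values(base, poller)]
```

Calling `report("base.env", "poller.env")` against the two files shown above would list every key, because the files are identical; any key that is expected to differ per node (for example `NODE_ID`) would stand out in that list.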

My base config.php is:

Database config

$config['db_host'] = 'x.x.x.x';

$config['db_user'] = 'librenms';

$config['db_pass'] = 'a_password';

$config['db_name'] = 'librenms';

$config['distributed_poller'] = true;

$config['distributed_poller_group'] = '0';

$config['memcached']['enable'] = true;

$config['memcached']['host'] = 'x.x.x.x';

$config['memcached']['port'] = 11211;

$config['service_poller_workers'] = 50; # Processes spawned for polling

$config['service_services_workers'] = 3; # Processes spawned for service polling

$config['service_discovery_workers'] = 5; # Processes spawned for discovery

$config['os']['junos']['nobulk'] = false;

$config['snmp']['max_repeaters'] = 50;

//Optional Settings

$config['service_poller_frequency'] = 400; # Seconds between polling attempts

$config['service_discovery_frequency'] = 21600; # Seconds between discovery runs

$config['service_poller_down_retry'] = 60; # Seconds between failed polling attempts

$config['service_loglevel'] = 'CRITICAL'; # Must be one of 'DEBUG', 'INFO', 'WARNING', 'ERROR', 'CRITICAL'

$config['service_ping_enabled'] = true;

My poller config.php is:

$config['distributed_poller_name'] = php_uname('n');

$config['distributed_poller_group'] = '0';

$config['distributed_poller_memcached_host'] = "x.x.x.x";

$config['distributed_poller_memcached_port'] = 11211;

$config['distributed_poller'] = true;

$config['rrdcached'] = "x.x.x.x:42217";

$config['update'] = 0;
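Since the additional poller relies on services hosted on the web server (Memcached on port 11211 and RRDCached on port 42217, per the configs above), one more thing I could rule out is basic network reachability from the poller. The sketch below is a minimal, standard-library-only Python check that only tests whether a TCP connection opens; it does not speak the Memcached, RRDCached or Redis protocols. The host values are placeholders because the real addresses are masked as x.x.x.x above. Note that `REDIS_HOST=localhost` in the poller's .env resolves to the poller itself, so if Redis only runs on the web server, that entry would not reach it (my understanding, not verified, is that the dispatcher service uses Redis to coordinate between nodes).

```python
"""Check whether a poller can open TCP connections to shared services.

Hypothetical helper: host addresses are placeholders; only TCP
connectivity is tested, not the service protocols themselves.
"""
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS resolution failures.
        return False


def check_services(services: dict[str, tuple[str, int]]) -> dict[str, bool]:
    """Map each service name to whether its host:port accepted a connection."""
    return {name: port_open(host, port) for name, (host, port) in services.items()}
```

Run on the additional poller, a call such as `check_services({"memcached": ("x.x.x.x", 11211), "rrdcached": ("x.x.x.x", 42217), "redis": ("localhost", 6379)})`, with x.x.x.x replaced by the web server's real address, would show which of the shared services the poller can actually reach.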

I do not see both pollers in the poller list. What am I doing wrong?