In the constant effort to keep your alert-to-noise ratio workable, here’s a way to alert on syslog entries only when there are more than a given number of messages in the time window. For comparison, in the Graylog world this can be done with grace periods.

Use case examples:

- login failures - one error may be OK, but several in a few minutes may need more investigation

- MAC flaps - one or two may just be transient wireless roaming and can be ignored, but more than 2 entries may indicate a bigger issue

This topic has come up a few times and it’s always eluded me, so I’ve tried again and here it is. There is probably a more efficient way that avoids running the query twice, but I haven’t found a workable shortcut yet, so this will have to do as a starting point. Feel free to improve it!

EDIT: The journey of hitting limitations and solving them is in the subsequent comments. It has also been added to the end of this first post, keeping some of the context and earlier attempts to help explain why the query is as complicated as it is.

Standard Query Explanation

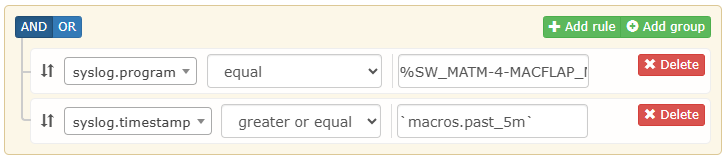

Assuming you have an alert rule built via the UI such as:

The Advanced tab will show the SQL query as:

```sql
SELECT * FROM devices,syslog WHERE (devices.device_id = ? AND devices.device_id = syslog.device_id) AND syslog.program = "%SW_MATM-4-MACFLAP_NOTIF" AND syslog.timestamp >= (DATE_SUB(NOW(),INTERVAL 5 MINUTE))
```

Reformatting this for clarity:

```sql
SELECT *
FROM
devices,syslog
/*-- ALERT CONDITIONS---*/
WHERE
(devices.device_id = ? AND devices.device_id = syslog.device_id)
AND syslog.program = "%SW_MATM-4-MACFLAP_NOTIF"
AND syslog.timestamp >= (DATE_SUB(NOW(),INTERVAL 5 MINUTE));
/*----------------------*/
```

This query returns one row per matching syslog entry. If we replace the ? with a valid device ID, we can test it on the database CLI.

The ALERT CONDITIONS comment block marks the relevant parts we need to duplicate in the final query.
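To try the standard query without touching a live LibreNMS database, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for MariaDB/MySQL. It makes several assumptions. The two tables model only the columns the query touches. The sample rows are hypothetical. SQLite's `datetime('now', '-5 minutes')` replaces MySQL's `DATE_SUB(NOW(), INTERVAL 5 MINUTE)`. Binding a value to the `?` plays the role of typing a device ID on the CLI.

```python
import sqlite3

# In-memory stand-in for the LibreNMS tables. Only the columns this query
# touches are modelled; the real schema has many more (assumption).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE devices (device_id INTEGER PRIMARY KEY, hostname TEXT);
CREATE TABLE syslog (seq INTEGER PRIMARY KEY, device_id INTEGER,
                     program TEXT, msg TEXT, timestamp TEXT);
INSERT INTO devices VALUES (1, 'switch1'), (2, 'switch2');
""")

# Hypothetical sample data as (device_id, minutes ago): device 1 has two
# recent flaps and one older one outside the window, device 2 has one.
for dev, ago in [(1, 1), (1, 2), (1, 30), (2, 1)]:
    conn.execute(
        "INSERT INTO syslog (device_id, program, msg, timestamp) "
        "VALUES (?, '%SW_MATM-4-MACFLAP_NOTIF', 'sample', datetime('now', ?))",
        (dev, f"-{ago} minutes"),
    )

# The rule from the Advanced tab, translated to SQLite:
# datetime('now', '-5 minutes') stands in for DATE_SUB(NOW(), INTERVAL 5 MINUTE).
QUERY = """
SELECT *
FROM devices, syslog
WHERE (devices.device_id = ? AND devices.device_id = syslog.device_id)
  AND syslog.program = '%SW_MATM-4-MACFLAP_NOTIF'
  AND syslog.timestamp >= datetime('now', '-5 minutes')
"""

# Binding 1 to the "?" is the equivalent of typing a device ID on the CLI.
rows = conn.execute(QUERY, (1,)).fetchall()
print(len(rows))  # 2: one row per matching syslog entry in the window
```

The entry from 30 minutes ago falls outside the window, so only the two recent flaps come back for device 1.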

Counting results as a condition

If we only want to report when more than one row is returned, we can repeat the alert conditions in a subselect, check the returned count, and use the result as a condition on the main query to suppress output.

The general format is:

```sql
SELECT *
FROM devices,syslog
<verbatim alert conditions>
AND (
    SELECT count(*)
    FROM devices,syslog
    <verbatim alert conditions>
    GROUP BY devices.device_id
    HAVING count(*) > 1
) IS NOT NULL
```

The bottom subquery only returns a value when the number of matching rows satisfies HAVING count(*) > 1. If there is one row or fewer, the subquery returns no row, so its value is NULL. The IS NOT NULL check then fails, the main query's WHERE clause is not met, and there is no output.
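To see the NULL behaviour in isolation, the subquery can be run on its own inside a scalar `SELECT (...)`. A group that fails the HAVING clause then comes back as `None` instead of as zero rows. This is a sketch against an in-memory SQLite database with hypothetical sample rows, not against MariaDB itself. SQLite, like MySQL, evaluates an empty scalar subquery to NULL.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE devices (device_id INTEGER PRIMARY KEY);
CREATE TABLE syslog (seq INTEGER PRIMARY KEY, device_id INTEGER,
                     program TEXT, timestamp TEXT);
INSERT INTO devices VALUES (1), (2);
""")
# Hypothetical data: device 1 has three recent flaps, device 2 has one.
for dev in (1, 1, 1, 2):
    conn.execute(
        "INSERT INTO syslog (device_id, program, timestamp) "
        "VALUES (?, '%SW_MATM-4-MACFLAP_NOTIF', datetime('now', '-1 minutes'))",
        (dev,),
    )

# The bottom subquery, wrapped in a scalar SELECT so that a group removed
# by HAVING shows up as NULL (None in Python).
SUBQUERY = """
SELECT (
  SELECT count(*)
  FROM devices, syslog
  WHERE (devices.device_id = ? AND devices.device_id = syslog.device_id)
    AND syslog.program = '%SW_MATM-4-MACFLAP_NOTIF'
    AND syslog.timestamp >= datetime('now', '-5 minutes')
  GROUP BY devices.device_id
  HAVING count(*) > 1
)
"""

def threshold_value(device_id):
    return conn.execute(SUBQUERY, (device_id,)).fetchone()[0]

print(threshold_value(1))  # 3: IS NOT NULL passes, main query returns rows
print(threshold_value(2))  # None: HAVING removed the group, output suppressed
```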

Using the existing example, the full query would be:

```sql
SELECT *
FROM devices,syslog
/*-- ALERT CONDITIONS---*/
WHERE
(devices.device_id = ? AND devices.device_id = syslog.device_id)
AND syslog.program = "%SW_MATM-4-MACFLAP_NOTIF"
AND syslog.timestamp >= (DATE_SUB(NOW(),INTERVAL 5 MINUTE))
/*----------------------*/
AND (
    SELECT count(*)
    FROM devices,syslog
    /*-- ALERT CONDITIONS---*/
    WHERE
    (devices.device_id = ? AND devices.device_id = syslog.device_id)
    AND syslog.program = "%SW_MATM-4-MACFLAP_NOTIF"
    AND syslog.timestamp >= (DATE_SUB(NOW(),INTERVAL 5 MINUTE))
    /*----------------------*/
    GROUP BY devices.device_id
    HAVING count(*) > 1
) IS NOT NULL;
```

If we replace both ? placeholders with a valid device ID, we can test this query on the database CLI.

To test fully, increase the INTERVAL to capture more results, then raise the x in HAVING count(*) > x to match the number of rows returned and confirm that the query suppresses output.
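The threshold test can be automated with a sketch like the one below. It runs the combined query against an in-memory SQLite database and sweeps the HAVING threshold. Several details are assumptions for illustration. The threshold is bound as a third `?` rather than edited by hand. The alert conditions are pasted in twice from one Python string to mirror the "verbatim alert conditions" format. The device ID is bound to both `?` placeholders, which a test harness can do but the alert code cannot.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE devices (device_id INTEGER PRIMARY KEY);
CREATE TABLE syslog (seq INTEGER PRIMARY KEY, device_id INTEGER,
                     program TEXT, timestamp TEXT);
INSERT INTO devices VALUES (1);
""")
for _ in range(3):  # hypothetical data: three recent flaps on device 1
    conn.execute(
        "INSERT INTO syslog (device_id, program, timestamp) "
        "VALUES (1, '%SW_MATM-4-MACFLAP_NOTIF', datetime('now', '-1 minutes'))"
    )

# The alert conditions, repeated verbatim in the main query and the subquery.
CONDITIONS = """
  (devices.device_id = ? AND devices.device_id = syslog.device_id)
  AND syslog.program = '%SW_MATM-4-MACFLAP_NOTIF'
  AND syslog.timestamp >= datetime('now', '-5 minutes')
"""

FULL_QUERY = f"""
SELECT * FROM devices, syslog
WHERE {CONDITIONS}
  AND (
    SELECT count(*) FROM devices, syslog
    WHERE {CONDITIONS}
    GROUP BY devices.device_id
    HAVING count(*) > ?
  ) IS NOT NULL
"""

def matching_rows(device_id, threshold):
    # Placeholders in order: outer device ID, inner device ID, threshold.
    params = (device_id, device_id, threshold)
    return len(conn.execute(FULL_QUERY, params).fetchall())

for threshold in range(5):
    print(threshold, matching_rows(1, threshold))
```

With three matching rows, thresholds 0 to 2 return all three rows. From threshold 3 upward the output is suppressed, which is exactly what the manual test is checking.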

This shows how we can count results, make a decision, and only then output them.

The catch is that this doesn’t work as a custom alert query, but at least you now understand how it works!

Making the query work as an alert

The issue is that the query now contains two ? placeholders, but the alert code only supplies one value. This results in the error SQLSTATE[HY093]: Invalid parameter number.

There are various ways around this, but the easiest one doesn’t work in MariaDB. The consequence is that we need to name each and every column we want, aliasing devices.device_id so it doesn’t clash with syslog.device_id (see Alert only when syslog message received multiple times - #4 by rhinoau).
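The placeholder mismatch is easy to reproduce outside LibreNMS. The sketch below uses Python's `sqlite3` module as an analogue. It is not PHP PDO, so the error class and message differ from SQLSTATE[HY093], but the cause is the same: the statement declares two `?` placeholders and only one value is supplied. The tables and query are trimmed-down hypotheticals.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE devices (device_id INTEGER PRIMARY KEY);
CREATE TABLE syslog (device_id INTEGER, program TEXT, timestamp TEXT);
""")

# Two "?" placeholders, as in the counting query above.
TWO_PLACEHOLDERS = """
SELECT * FROM devices, syslog
WHERE devices.device_id = ? AND devices.device_id = syslog.device_id
  AND (SELECT count(*) FROM syslog WHERE syslog.device_id = ?) > 1
"""

try:
    conn.execute(TWO_PLACEHOLDERS, (1,))  # one value, like the alert code
    error = None
except sqlite3.ProgrammingError as exc:  # sqlite3's "wrong binding count" error
    error = exc
print(type(error).__name__, error)

# Supplying both values works, which is why the query runs on the CLI.
ok = conn.execute(TWO_PLACEHOLDERS, (1, 1)).fetchall()
```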

The Final Query

```sql
SELECT *
FROM (
    SELECT
        d.device_id AS dev_id, -- avoid column name clash
        d.inserted,d.hostname,d.sysName,d.display,d.ip,d.overwrite_ip,d.community,
        d.authlevel,d.authname,d.authpass,d.authalgo,d.cryptopass,d.cryptoalgo,d.snmpver,
        d.port,d.transport,d.timeout,d.retries,d.snmp_disable,d.bgpLocalAs,d.sysObjectID,
        d.sysDescr,d.sysContact,d.version,d.hardware,d.features,d.location_id,d.os,d.status,
        d.status_reason,d.ignore,d.disabled,d.uptime,d.agent_uptime,d.last_polled,
        d.last_poll_attempted,d.last_polled_timetaken,d.last_discovered_timetaken,
        d.last_discovered,d.last_ping,d.last_ping_timetaken,d.purpose,d.type,d.serial,
        d.icon,d.poller_group,d.override_sysLocation,d.notes,d.port_association_mode,
        d.max_depth,d.disable_notify,d.ignore_status,
        s.facility,s.priority,s.level,s.tag,s.timestamp,
        s.program,s.msg,s.seq
    FROM devices d
    JOIN syslog s ON d.device_id = s.device_id
    WHERE d.device_id = ?
    /*-- ALERT CONDITIONS---*/
    AND s.program = "%SW_MATM-4-MACFLAP_NOTIF"
    AND s.timestamp >= (DATE_SUB(NOW(), INTERVAL 5 MINUTE))
    /*----------------------*/
) AS logs
WHERE (
    SELECT COUNT(*)
    FROM syslog s
    WHERE s.device_id = logs.dev_id
    /*-- ALERT CONDITIONS---*/
    AND s.program = "%SW_MATM-4-MACFLAP_NOTIF"
    AND s.timestamp >= (DATE_SUB(NOW(), INTERVAL 5 MINUTE))
    /*----------------------*/
) > 1;
```

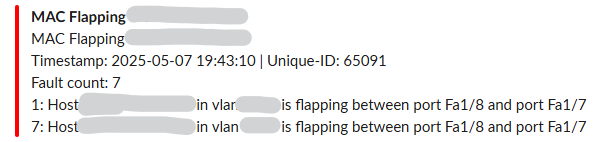
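The key trick in the final query is that the only `?` sits inside the derived table `logs`. The COUNT(*) subquery is correlated on `logs.dev_id`, so it needs no placeholder of its own. Here is a minimal sketch of that shape, run in SQLite with a trimmed column list and hypothetical data. It is an assumption that SQLite is close enough to MariaDB here: it accepts correlated subqueries against a derived table's columns, but unlike MariaDB it does not reject duplicate column names in a derived table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE devices (device_id INTEGER PRIMARY KEY, hostname TEXT);
CREATE TABLE syslog (seq INTEGER PRIMARY KEY, device_id INTEGER,
                     program TEXT, msg TEXT, timestamp TEXT);
INSERT INTO devices VALUES (1, 'switch1'), (2, 'switch2');
""")
for dev in (1, 1, 1, 2):  # hypothetical: three recent flaps on 1, one on 2
    conn.execute(
        "INSERT INTO syslog (device_id, program, msg, timestamp) "
        "VALUES (?, '%SW_MATM-4-MACFLAP_NOTIF', 'sample', datetime('now', '-1 minutes'))",
        (dev,),
    )

# Same shape as the final query with fewer columns: one "?" in the derived
# table, and a COUNT(*) subquery correlated on logs.dev_id.
FINAL_QUERY = """
SELECT *
FROM (
  SELECT d.device_id AS dev_id, d.hostname,
         s.program, s.msg, s.timestamp, s.seq
  FROM devices d
  JOIN syslog s ON d.device_id = s.device_id
  WHERE d.device_id = ?
    AND s.program = '%SW_MATM-4-MACFLAP_NOTIF'
    AND s.timestamp >= datetime('now', '-5 minutes')
) AS logs
WHERE (
  SELECT COUNT(*)
  FROM syslog s
  WHERE s.device_id = logs.dev_id
    AND s.program = '%SW_MATM-4-MACFLAP_NOTIF'
    AND s.timestamp >= datetime('now', '-5 minutes')
) > 1
"""

alerting = conn.execute(FINAL_QUERY, (1,)).fetchall()  # a single bound value
quiet = conn.execute(FINAL_QUERY, (2,)).fetchall()
print(len(alerting), len(quiet))  # 3 0
```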

Alert template ideas

If the results going to your transports are too verbose, another way to improve readability may be to filter the output in the alert template:

```blade
@if ($alert->faults)
Fault count: {{ count($alert->faults) }}
@foreach ($alert->faults as $key => $value)
@if ($loop->first)
{{ $key }}: {{ $value['msg'] }}
@endif
@if ($loop->last)
{{ $key }}: {{ $value['msg'] }}
@endif
@endforeach
@endif
```
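To reason about what the template emits, its control flow can be mirrored in a few lines of Python. This is a hypothetical sketch, not LibreNMS code. The `faults` dict stands in for `$alert->faults`, and the only field assumed on each row is `msg`.

```python
def render_summary(faults):
    # faults: hypothetical stand-in for $alert->faults, mapping a key to a
    # row dict that has at least a 'msg' field.
    if not faults:  # @if ($alert->faults)
        return ""
    lines = [f"Fault count: {len(faults)}"]
    last_index = len(faults) - 1
    for index, (key, value) in enumerate(faults.items()):  # @foreach
        if index == 0:  # @if ($loop->first)
            lines.append(f"{key}: {value['msg']}")
        if index == last_index:  # @if ($loop->last)
            lines.append(f"{key}: {value['msg']}")
    return "\n".join(lines)

faults = {1: {"msg": "first flap"}, 2: {"msg": "middle flap"}, 3: {"msg": "last flap"}}
print(render_summary(faults))
```

One side effect of the two independent `@if` checks is that a single fault would be printed twice, because it is both first and last. With the `> 1` threshold in the final query, an alert always carries at least two faults, so this should not occur in practice.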

This prints the first and last log entries from the result set, with the count at the top to give some context. In Slack, it looks like this: