Hi All,

TL;DR: interrupted comms during polling/discovery cause strange sensor readings (and, in some cases, interface re-enumeration; links below). Is this something I can tune around, or a fundamental issue we should dig into further for these situations?

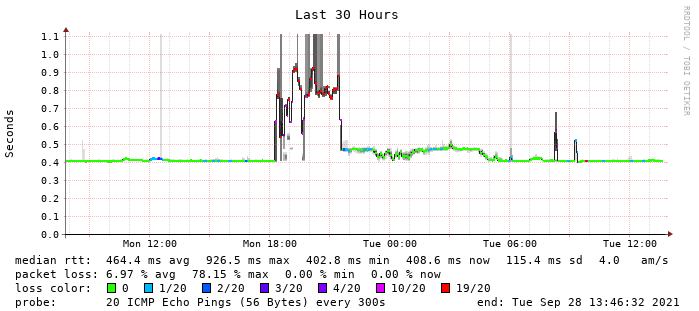

I have another strange one, caused by interrupted comms at a remote site: a redundant comms failure and link flapping left congestion and 50%+ packet loss as the main issue for a few hours.

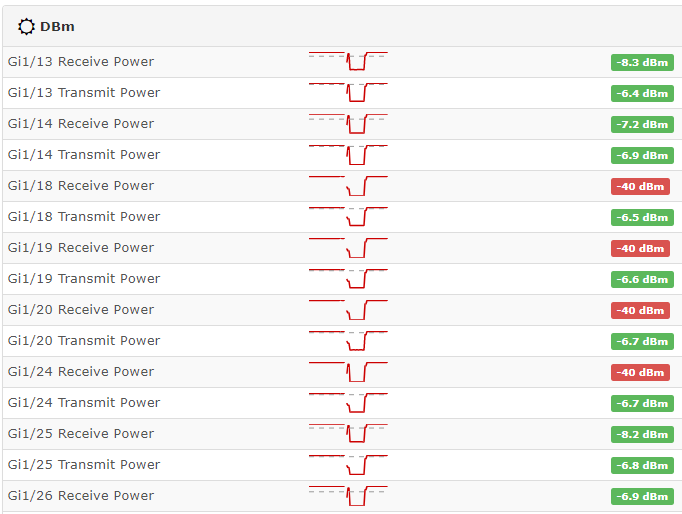

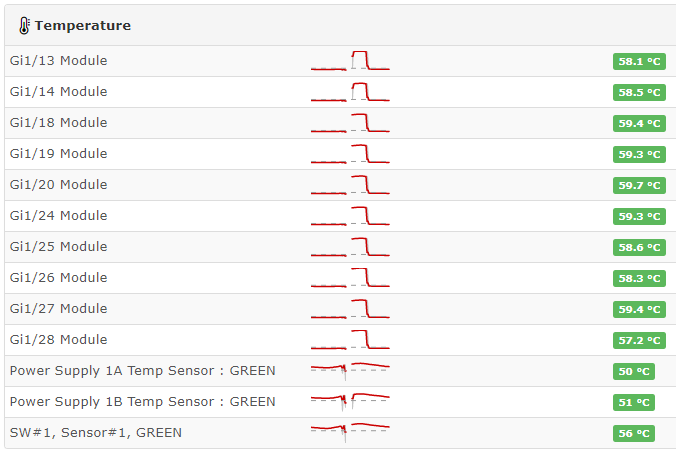

The result was that various Cisco IE-4010 switches did this to all of their sensors:

And so on across all sensors. A close-up example:

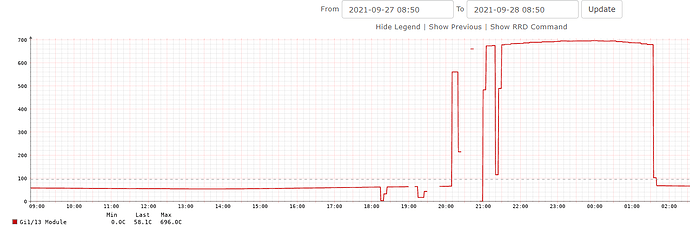

I checked that switch, and it definitely wasn't in a furnace at the time. Everything recovered once a discovery run completed successfully on stabilised comms.

The outage looked like this from a device further upstream:

For this device, the discovery logged at 20:44 (during the outage) will be the one that broke it, and the one at 01:43 fixed it. Note that the 20:44 run took over 2,400 seconds, against roughly 300 to 335 seconds for the others:

```
~$ grep 'y.php 65' logs/librenms.log
/opt/librenms/discovery.php 65 2021-09-27 07:42:43 - 1 devices discovered in 303.9 secs
/opt/librenms/discovery.php 65 2021-09-27 13:22:35 - 1 devices discovered in 319.1 secs
/opt/librenms/discovery.php 65 2021-09-27 20:44:53 - 1 devices discovered in 2429. secs
/opt/librenms/discovery.php 65 2021-09-28 01:43:48 - 1 devices discovered in 335.8 secs
/opt/librenms/discovery.php 65 2021-09-28 07:42:58 - 1 devices discovered in 311.7 secs
/opt/librenms/discovery.php 65 2021-09-28 13:44:39 - 1 devices discovered in 329.9 secs
```
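The 20:44 outlier is obvious by eye here, but across a few hundred devices it's easy to miss. As a rough aid for spotting discovery runs that were probably hit by bad comms, here's a minimal Python sketch. It's my own, not part of LibreNMS; `find_slow_runs` and the 3× threshold are arbitrary choices, and it assumes log lines worded exactly as in the grep output above. It pulls out each run's duration and flags any run much slower than that device's median:

```python
import re
import statistics

# Matches the tail of a discovery log line in the format shown above, e.g.
# "... 2021-09-27 20:44:53 - 1 devices discovered in 2429. secs"
# (assumption: this exact wording; other LibreNMS versions may log differently)
LINE_RE = re.compile(
    r"(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) - \d+ devices? discovered in ([\d.]+) secs"
)


def find_slow_runs(lines, factor=3.0):
    """Return (timestamp, seconds) for runs longer than `factor` x the median.

    `lines` should come from a single device (e.g. the grep output above);
    otherwise the median mixes devices with very different run times.
    """
    runs = []
    for line in lines:
        match = LINE_RE.search(line)
        if match:
            runs.append((match.group(1), float(match.group(2))))
    if not runs:
        return []
    median = statistics.median([secs for _, secs in runs])
    return [(ts, secs) for ts, secs in runs if secs > factor * median]


if __name__ == "__main__":
    sample = [
        "/opt/librenms/discovery.php 65 2021-09-27 07:42:43 - 1 devices discovered in 303.9 secs",
        "/opt/librenms/discovery.php 65 2021-09-27 13:22:35 - 1 devices discovered in 319.1 secs",
        "/opt/librenms/discovery.php 65 2021-09-27 20:44:53 - 1 devices discovered in 2429. secs",
        "/opt/librenms/discovery.php 65 2021-09-28 01:43:48 - 1 devices discovered in 335.8 secs",
        "/opt/librenms/discovery.php 65 2021-09-28 07:42:58 - 1 devices discovered in 311.7 secs",
        "/opt/librenms/discovery.php 65 2021-09-28 13:44:39 - 1 devices discovered in 329.9 secs",
    ]
    print(find_slow_runs(sample))  # [('2021-09-27 20:44:53', 2429.0)]
```

On the lines above it flags only the 20:44 run. A median is used rather than a mean so that a single very slow run doesn't drag the baseline up much.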

This ties back to some other issues I've logged about the consequences of this sort of patchy comms. The first was the worst: it destroyed historical data and changed all my port IDs, so I had to fix several hundred link entries manually across various weathermaps:

- Device port IDs changed after recovery from site outage

- Debugging graph spikes from high latency links - #9 by rhinoau

I've lost my copy of the polling output from that time, but a debug I ran from the UI genuinely showed values like 700 degrees in the output, so I'm at a loss as to how it managed to get those values. This time, though, the sensor IDs and the like have not changed and no historical data was lost.

Does anyone have a clue as to the sequence of events that might cause this internally?

Cheers!

```
====================================
Component | Version
--------- | -------
LibreNMS | 21.9.0
DB Schema | 2021_25_01_0129_isis_adjacencies_nullable (217)
PHP | 7.3.30-1+ubuntu18.04.1+deb.sury.org+1
Python | 3.6.9
MySQL | 10.5.12-MariaDB-1:10.5.12+maria~bionic
RRDTool | 1.7.0
SNMP | NET-SNMP 5.7.3
====================================

[OK] Composer Version: 2.1.8
[OK] Dependencies up-to-date.
[OK] Database connection successful
[OK] Database schema correct
```