I am setting up Distributed Polling because my current machine cannot finish polling within the 300-second polling interval (I have 7,000+ devices).

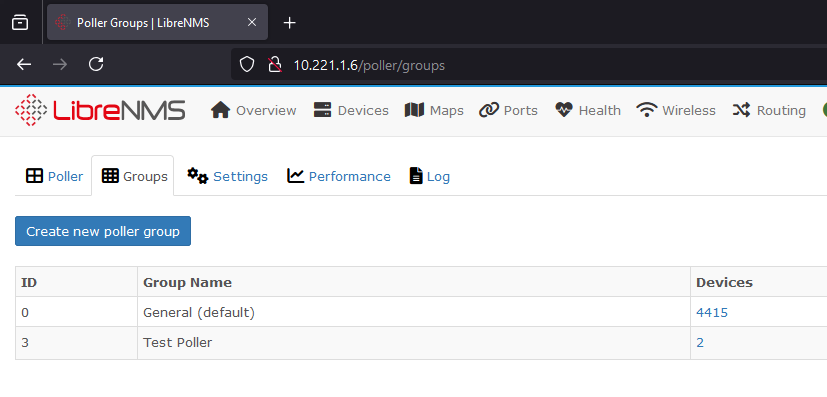

After setting everything up, I created a separate poller group for each poller.

The problem I have is:

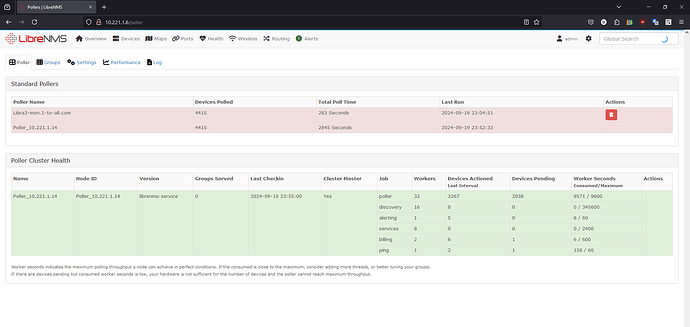

Machine 1 does not keep polling poller group 1.

Machine 2 polls group 1 instead of group 2.

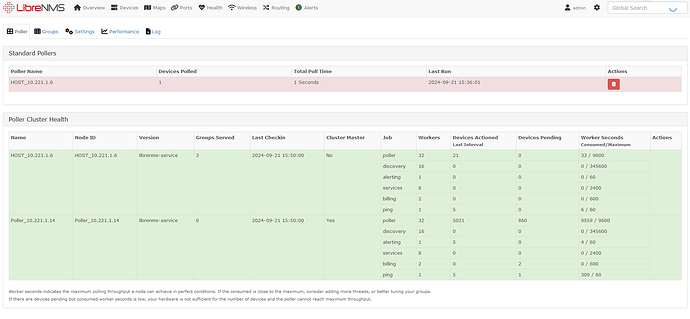

I also accidentally deleted machine 1's poller by clicking its trash can icon.

I assumed it could be added back, but I can't find a way to do so.

Is there a way to restore machine 1's poller and keep the poller groups separate (machine 1 on group 1, machine 2 on group 2)?

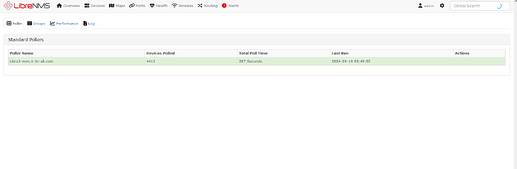

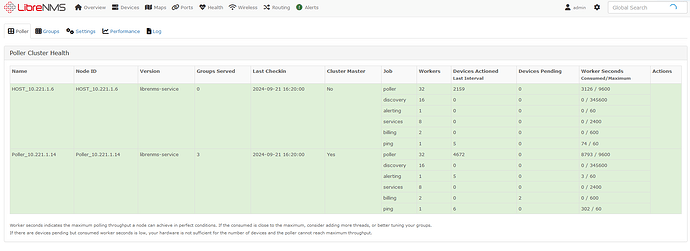

These are screenshots of my Pollers page.

Here is the `validate.php` output from the first machine:

```text
[librenms@zabbix-mon ~]$ ./validate.php
===========================================
Component | Version
--------- | -------
LibreNMS | 24.8.0-75-g2fc59a470 (2024-09-18T02:54:34+07:00)
DB Schema | 2024_08_27_182000_ports_statistics_table_rev_length (299)
PHP | 8.2.13
Python | 3.9.18
Database | MariaDB 10.5.22-MariaDB
RRDTool | 1.7.2
SNMP | 5.9.1
===========================================
[OK] Composer Version: 2.7.9
[OK] Dependencies up-to-date.
[OK] Database connection successful
[OK] Database connection successful
[OK] Database Schema is current
[OK] SQL Server meets minimum requirements
[OK] lower_case_table_names is enabled
[OK] MySQL engine is optimal
[OK] Database and column collations are correct
[OK] Database schema correct
[OK] MySQL and PHP time match
[OK] Distributed Polling setting is enabled globally
[OK] Connected to rrdcached
[OK] Active pollers found
[OK] Dispatcher Service not detected
[OK] Locks are functional
[OK] Python poller wrapper is polling
[OK] Redis is functional
[OK] rrdtool version ok
[OK] Connected to rrdcached
[FAIL] We have found some files that are owned by a different user than 'librenms', this will stop you updating automatically and / or rrd files being updated causing graphs to fail.
[FIX]:
sudo chown -R librenms:librenms /opt/librenms
sudo setfacl -d -m g::rwx /opt/librenms/rrd /opt/librenms/logs /opt/librenms/bootstrap/cache/ /opt/librenms/storage/
sudo chmod -R ug=rwX /opt/librenms/rrd /opt/librenms/logs /opt/librenms/bootstrap/cache/ /opt/librenms/storage/
Files:
/opt/librenms/cache/os_defs.cache
```
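The [FAIL] line on the first machine flags files under `/opt/librenms` that are not owned by `librenms`. As a minimal sketch (not LibreNMS's own implementation of that check), assuming the default install path, the Python snippet below walks a directory tree and lists every entry whose owning UID differs from an expected one, which can help confirm that the suggested `chown` fixed everything:

```python
import os


def files_not_owned_by(root: str, expected_uid: int) -> list[str]:
    """Return every path below `root` whose owning UID differs from `expected_uid`.

    Entries are inspected with lstat(), so a symlink's own owner is checked,
    not its target's. The `root` directory itself is not included.
    """
    mismatched = []
    # os.walk does not descend into symlinked directories by default.
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.lstat(path)
            except FileNotFoundError:
                # The file disappeared between listing and checking; skip it.
                continue
            if st.st_uid != expected_uid:
                mismatched.append(path)
    return mismatched
```

For example, `files_not_owned_by("/opt/librenms", pwd.getpwnam("librenms").pw_uid)` (after `import pwd`) should return an empty list once `sudo chown -R librenms:librenms /opt/librenms` has been applied. Taking a numeric UID rather than a user name keeps the function independent of the local password database.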

Here is the `validate.php` output from the second machine:

```text
[librenms@Libra3-mon ~]$ ./validate.php
===========================================
Component | Version
--------- | -------
LibreNMS | 24.8.0-75-g2fc59a470 (2024-09-18T02:54:34+07:00)
DB Schema | 2024_08_27_182000_ports_statistics_table_rev_length (299)
PHP | 8.1.27
Python | 3.9.19
Database | MariaDB 10.5.22-MariaDB
RRDTool | 1.7.2
SNMP | 5.9.1
===========================================
[OK] Composer Version: 2.7.9
[OK] Dependencies up-to-date.
[OK] Database connection successful
[OK] Database connection successful
[OK] Database Schema is current
[OK] SQL Server meets minimum requirements
[OK] lower_case_table_names is enabled
[OK] MySQL engine is optimal
[OK] Database and column collations are correct
[OK] Database schema correct
[OK] MySQL and PHP time match
[OK] Distributed Polling setting is enabled globally
[OK] Connected to rrdcached
[OK] Active pollers found
[OK] Dispatcher Service not detected
[OK] Locks are functional
[OK] Python poller wrapper is polling
[OK] Redis is functional
[OK] rrdtool version ok
[OK] Connected to rrdcached
```