Thank you again @snmpd, so so much!

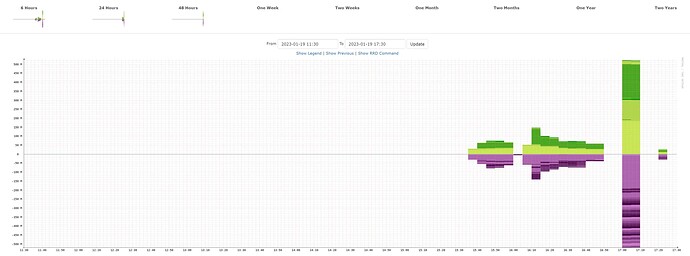

I have been looking at this again this afternoon, and I’m starting to notice a lot of RRD [0/0.00s] in the outputs, especially when the scripts are run from these options in the GUI.

./discovery.php host1 2023-01-16 17:40:09 - 1 devices discovered in 153.2 secs

SNMP [164/111.71s]: Get[107/12.39s] Getnext[1/0.11s] Walk[56/99.21s]

MySQL [5530/9.83s]: Cell[437/10.34s] Row[2146/-5.67s] Rows[95/0.31s] Column[32/0.06s] Update[2755/4.36s] Insert[57/0.31s] Delete[8/0.11s]

RRD [0/0.00s]:

./poller.php host1 2023-01-16 17:46:53 - 1 devices polled in 293.8 secs

SNMP [47/283.06s]: Get[16/2.00s] Getnext[4/0.40s] Walk[27/280.66s]

MySQL [1170/4.66s]: Cell[14/0.04s] Row[-13/-0.03s] Rows[28/0.14s] Column[1/0.00s] Update[1138/4.51s] Insert[2/0.01s] Delete[0/0.00s]

RRD [549/0.28s]: Update[547/0.28s] Create[2/0.00s]
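To compare runs quickly, the total can be pulled out of the RRD summary line that each script prints at the end. This is a minimal sketch that assumes only the summary format shown in the output above; the function name rrd_ops is hypothetical.

```bash
#!/usr/bin/env bash
# Print the total operation count from the "RRD [count/time]:" summary line
# printed at the end of a discovery.php / poller.php run.
# Reads the run output on stdin; prints nothing if there is no RRD line.
rrd_ops() {
  sed -n 's/^RRD \[\([0-9-]*\)\/.*/\1/p'
}

# Example with the summary lines from the two runs above.
printf 'RRD [0/0.00s]:\n' | rrd_ops                                        # prints 0
printf 'RRD [549/0.28s]: Update[547/0.28s] Create[2/0.00s]\n' | rrd_ops  # prints 549
```

If a run's output has been saved to a file (for example a hypothetical poller-output.txt), rrd_ops < poller-output.txt shows at a glance whether that run wrote anything to RRD.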

I am starting to wonder whether rrdcached is working. I have it in a Docker container, which is running and reports as healthy, but I’m unsure how to check the service inside the container, because it says systemctl is not recognised. My questions are: is rrdcached actually working correctly, and could this be linked to daily.sh?
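Since systemctl isn’t available inside the container, one option is to talk to rrdcached directly: it accepts plain-text commands on its socket, and STATS returns counters such as UpdatesReceived and QueueLength. If those counters grow between poller runs, rrdcached is receiving updates. This is a minimal sketch, assuming bash with /dev/tcp support and that it runs inside a container on the same Docker network (the 42217/tcp port listed for nms_rrdcached in docker ps is not published to the host). The function names and the default host name rrdcached are hypothetical; use whatever name your LibreNMS container uses to reach rrdcached.

```bash
#!/usr/bin/env bash
# Ask rrdcached for its statistics over TCP, without needing systemctl.
# Assumptions (hypothetical defaults): host "rrdcached", port 42217.
# Relies on bash's /dev/tcp redirection being available.
rrdcached_stats() {
  local host="${1:-rrdcached}" port="${2:-42217}"
  exec 3<>"/dev/tcp/${host}/${port}" || return 1
  # STATS returns a header line plus one "Name: value" line per counter;
  # QUIT makes rrdcached close the connection so that cat sees EOF.
  printf 'STATS\nQUIT\n' >&3
  cat <&3
  exec 3<&-
}

# Extract a single counter (e.g. QueueLength, UpdatesReceived) from STATS output on stdin.
stat_value() {
  awk -F': ' -v key="$1" '$1 == key { print $2 }'
}

# Usage, from inside the LibreNMS container:
#   rrdcached_stats rrdcached 42217 | stat_value UpdatesReceived
```

Running the usage line before and after a poller run and comparing the two numbers is a simple way to tell "rrdcached is up" apart from "rrdcached is actually getting data".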

The last change I made to this VM was growing the disk, because the existing files had used up all the free space. You may also be right about incorrect permissions, but having checked /opt/librenms, I’m unsure which other folders could be wrong. I’ve got about two years of history, so I’m trying to avoid a reinstall.
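Given the full disk and the permissions question, a quick check is to look at free space on the filesystem holding the RRD files and list anything there that isn’t owned by the expected user (for example files created while running the scripts as root). This is a minimal sketch; the function name check_rrd_dir is hypothetical, and the path and owner in the usage line are assumptions. With rrdcached in its own container, the RRD files may actually live in that container’s volume, so check your volume mappings first.

```bash
#!/usr/bin/env bash
# Report disk usage and ownership problems for an RRD directory.
# Arguments: directory to check, expected owner (user name or numeric UID).
check_rrd_dir() {
  local dir="$1" owner="$2"
  # Percentage used on the filesystem that holds $dir (e.g. "usage: 42%").
  df -P "$dir" | awk 'NR == 2 { print "usage: " $5 }'
  # Every file or directory under $dir NOT owned by $owner.
  find "$dir" ! -user "$owner" -print
}

# Usage (path and owner are assumptions, adjust to your setup):
#   check_rrd_dir /opt/librenms/rrd librenms
```

An empty list after the usage line means everything in that directory has the expected owner.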

user@srv1:~$ docker ps | grep libre

cfd55543a8e1 adolfintel/speedtest "docker-php-entrypoi…" 15 months ago Up 3 weeks 0.0.0.0:80->80/tcp, :::80->80/tcp librespeed

db210a218757 librenms/librenms:latest "/init" 16 months ago Up 3 weeks 514/tcp, 514/udp, 0.0.0.0:8000->8000/tcp, :::8000->8000/tcp nms_librenms

59f7d62c9ab9 librenms/librenms:latest "/init" 2 years ago Up 3 weeks 514/tcp, 8000/tcp, 514/udp nms_dispatcher

user@srv1:~$ docker ps | grep rrd

3e1bf3250c15 crazymax/rrdcached "/init" 2 years ago Up 3 weeks (healthy) 42217/tcp nms_rrdcached

user@srv1:~$ sudo -s

[sudo] password for user:

user@srv1:/home/user# docker exec -it nms_librenms bash

bash-5.0# pwd

/opt/librenms

Running the scripts again as root (rather than as my user, as I did before):

(I’ve trimmed the output to try and help; if there’s something more specific you need, I’m happy to share the full output.)

./discovery.php host1 2023-01-16 17:53:54 - 1 devices discovered in 199.9 secs

SNMP [162/152.14s]: Get[106/12.70s] Getnext[1/0.11s] Walk[55/139.34s]

MySQL [5556/10.51s]: Cell[437/9.85s] Row[2166/-5.03s] Rows[95/0.30s] Column[32/0.05s] Update[2755/4.93s] Insert[65/0.34s] Delete[6/0.07s]

./poller.php host1 2023-01-16 17:59:21 - 1 devices polled in 287.6 secs

SNMP [48/277.41s]: Get[17/2.28s] Getnext[4/0.41s] Walk[27/274.72s]

MySQL [1163/4.41s]: Cell[14/0.03s] Row[-13/-0.03s] Rows[28/0.14s] Column[1/0.00s] Update[1131/4.26s] Insert[2/0.01s] Delete[0/0.00s]

RRD [548/0.29s]: Update[548/0.29s]