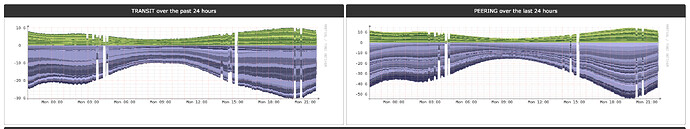

I don’t know if I am on the right path in troubleshooting the issue I have been seeing with intermittent drops / gaps in my TRANSIT & PEERING graphs… But every few hours I see the following:

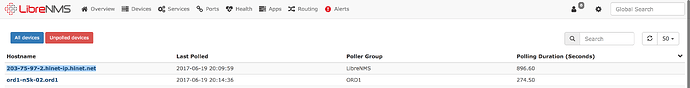

When this happens I have been able to track it down to a device or two that have higher than 300 millisecond response times.

I cannot say for sure whether this is memcache related… I am only guessing because of the device locks that should be associated with memcache. I checked librenms.log, and the only entry around the time of the drops that isn’t device related is:

MySQL Error: You have an error in your SQL syntax; check the manual that corresponds to your MariaDB server version for the right syntax to use near ')' at line 1 (DELETE FROM pdb_ix WHERE pdb_ix_id NOT IN ())
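For what it is worth, that log entry looks like a query built from an empty list of IDs rather than anything to do with device locks: MariaDB treats `NOT IN ()` with nothing between the parentheses as a syntax error. Below is a minimal Python sketch of guarding against that case. The function name `build_prune_query` and the `%s` placeholder style are assumptions made for illustration; this is not LibreNMS's own code.

```python
def build_prune_query(keep_ids):
    """Build a DELETE that removes rows whose ID is not in keep_ids.

    Hypothetical helper for illustration only. Returns (sql, params),
    or None when keep_ids is empty so that the caller never sends
    "NOT IN ()" to the server.
    """
    ids = list(keep_ids)
    if not ids:
        # Nothing to compare against: skip the query instead of
        # emitting an empty IN list, which MariaDB rejects.
        return None
    # One placeholder per ID; the values are passed separately as params.
    placeholders = ", ".join(["%s"] * len(ids))
    sql = f"DELETE FROM pdb_ix WHERE pdb_ix_id NOT IN ({placeholders})"
    return sql, ids
```

Whether skipping the delete is the right behaviour when the list is empty depends on what the caller intends, so the sketch leaves that decision to the caller by returning `None`.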

I know that there are multiple deployments of LibreNMS using distributed polling so I am hoping that I have something misconfigured…

Thank you.

-Patrick

You need to look at the individual device polling graphs to see what modules are taking the longest and then you can make a decision about how to handle that.

Post the poller graphs here if you want feedback.

I have looked at the polling graphs, and the high poll times are due to the number of ports being polled. We have several Cisco Nexus devices with fabric extenders, so a device with 400 - 450+ ports is not unheard of. I am working on splitting apart services to try to keep the poll times under 300 seconds… But my concern is what happens when a device goes over 300 seconds. The ripple effect it has on the rest of the devices polled is what is concerning. When the poller service matures and the bugs are worked out, will it be a better way to go than poller-wrapper and memcached?

Thank you.

-Patrick

Have you followed the performance docs?

I followed them word for word… The one thing I see is that every time a device goes over a 300-second poll duration, the issue starts showing up in the graphs.

Would this issue be resolved once poller-service is bug free, since it does a device lock at the DB layer? Or is it the same issue, because it locks a set of devices and does not unlock them until the last device has finished polling?
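To make the concern about one slow device concrete, here is a rough Python sketch that estimates how long a full polling run takes for a given set of per-device poll durations and a given number of workers. It assumes a simple model in which each worker takes the next device from a shared queue as soon as it is free; that model, and the names `run_finish_time` and `overruns`, are assumptions for illustration and not LibreNMS's actual scheduler.

```python
import heapq

POLL_INTERVAL_S = 300  # 5-minute polling interval, as discussed in this thread


def run_finish_time(durations_s, workers):
    """Return the wall-clock seconds needed to poll every device once.

    Hypothetical model: each worker picks up the next device as soon as
    it is free. This is a simplification, not LibreNMS's scheduler.
    """
    if workers < 1:
        raise ValueError("workers must be at least 1")
    # Each heap entry is the time at which one worker becomes free.
    free_at = [0.0] * workers
    heapq.heapify(free_at)
    finish = 0.0
    # Start the slowest devices first so they are not left until last.
    for duration in sorted(durations_s, reverse=True):
        start = heapq.heappop(free_at)
        end = start + duration
        finish = max(finish, end)
        heapq.heappush(free_at, end)
    return finish


def overruns(durations_s, workers, interval_s=POLL_INTERVAL_S):
    """True when a full polling run would not fit inside one interval."""
    return run_finish_time(durations_s, workers) > interval_s
```

Under this model, a single device that takes 320 seconds pushes the whole run past the interval no matter how many workers are available, for example `overruns([320, 40, 40, 40], workers=2)` is true, while four devices at 200, 100, 100 and 100 seconds just fit on two workers.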

Poller-service has other things which help with this, but the locking part isn’t one of them.

Are all the 400-450 ports in use?

A large majority of the ports are in use. Would you recommend that I try per-port polling?

That depends, really; you may as well try it and see. If it doesn’t work, you will need to either disable a lot of ports you don’t care about or just turn off the ports module.