Hi All.

I follow the monthly updates, and the last one was last week.

Today I noticed that a bunch of Arista switches are being polled way too slowly, and snmpd is starving the CPU on them.

#bash top

top - 09:54:09 up 313 days, 6:35, 2 users, load average: 0.81, 0.99, 1.33

Tasks: 331 total, 2 running, 329 sleeping, 0 stopped, 0 zombie

%Cpu(s): 29.7 us, 1.2 sy, 0.0 ni, 67.5 id, 0.0 wa, 0.6 hi, 0.9 si, 0.0 st

KiB Mem: 32561636 total, 8648684 used, 23912952 free, 404480 buffers

KiB Swap: 0 total, 0 used, 0 free, 3427984 cached

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

4101 root 20 0 979m 972m 5888 R 99.2 3.1 6474:19 snmpd

I see timeouts and slow responses for the bgp-peers module:

>> Runtime for poller module 'bgp-peers': 388.0714 seconds with 269376 bytes

>> SNMP: [104/387.35s] MySQL: [291/0.52s] RRD: [406/0.10s]

#### Unload poller module bgp-peers ####
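For anyone who wants to spot this across many devices, here is a rough sketch that pulls the runtime and per-backend timings out of poller debug output like the lines above. The regexes are written only against the two line formats quoted in this post, and the 60 second threshold and the function names are my own assumptions, not anything LibreNMS ships.

```python
import re

# Patterns for the two summary lines quoted above (assumed format, taken from this post only).
RUNTIME_RE = re.compile(
    r"Runtime for poller module '(?P<module>[^']+)': (?P<seconds>[\d.]+) seconds"
)
BACKEND_RE = re.compile(
    r"(?P<name>SNMP|MySQL|RRD): \[(?P<count>\d+)/(?P<seconds>[\d.]+)s\]"
)


def slow_modules(log_text, threshold=60.0):
    """Return (module, seconds) pairs whose runtime exceeds threshold seconds.

    The default threshold is an arbitrary value chosen for illustration.
    """
    found = []
    for match in RUNTIME_RE.finditer(log_text):
        seconds = float(match.group("seconds"))
        if seconds > threshold:
            found.append((match.group("module"), seconds))
    return found


def backend_times(log_text):
    """Map each backend (SNMP, MySQL, RRD) to (call count, seconds spent)."""
    return {
        m.group("name"): (int(m.group("count")), float(m.group("seconds")))
        for m in BACKEND_RE.finditer(log_text)
    }


sample = """\
>> Runtime for poller module 'bgp-peers': 388.0714 seconds with 269376 bytes
>> SNMP: [104/387.35s] MySQL: [291/0.52s] RRD: [406/0.10s]
"""

print(slow_modules(sample))
print(backend_times(sample))
```

On the sample above, nearly all of the module's runtime is attributed to the SNMP calls, which points at the device side rather than the database or RRD.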

It looks like this:

SNMP['/usr/bin/snmpget' '-v2c' '-c' 'COMMUNITY' '-OQUs' '-m' 'ARISTA-BGP4V2-MIB' '-M' '/opt/librenms/mibs:/opt/librenms/mibs/arista' 'udp:HOSTNAME:161' 'aristaBgp4V2PeerState.1.2.16.32.1.13.245.184.0.187.0.0.0.0.3.41.52.0.4' 'aristaBgp4V2PeerAdminStatus.1.2.16.32.1.13.245.184.0.187.0.0.0.0.3.41.52.0.4' 'aristaBgp4V2PeerInUpdates.1.2.16.32.1.13.245.184.0.187.0.0.0.0.3.41.52.0.4' 'aristaBgp4V2PeerOutUpdates.1.2.16.32.1.13.245.184.0.187.0.0.0.0.3.41.52.0.4' 'aristaBgp4V2PeerInTotalMessages.1.2.16.32.1.13.245.184.0.187.0.0.0.0.3.41.52.0.4' 'aristaBgp4V2PeerOutTotalMessages.1.2.16.32.1.13.245.184.0.187.0.0.0.0.3.41.52.0.4' 'aristaBgp4V2PeerFsmEstablishedTime.1.2.16.32.1.13.245.184.0.187.0.0.0.0.3.41.52.0.4' 'aristaBgp4V2PeerInUpdatesElapsedTime.1.2.16.32.1.13.245.184.0.187.0.0.0.0.3.41.52.0.4' 'aristaBgp4V2PeerLocalAddr.1.2.16.32.1.13.245.184.0.187.0.0.0.0.3.41.52.0.4' 'aristaBgp4V2PeerLastErrorCodeReceived.1.2.16.32.1.13.245.184.0.187.0.0.0.0.3.41.52.0.4' 'aristaBgp4V2PeerLastErrorSubCodeReceived.1.2.16.32.1.13.245.184.0.187.0.0.0.0.3.41.52.0.4' 'aristaBgp4V2PeerLastErrorReceivedText.1.2.16.32.1.13.245.184.0.187.0.0.0.0.3.41.52.0.4']

Timeout: No Response from udp:device:161.
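To work out which peer a timing-out request belongs to, the numeric suffix on those OIDs can be decoded. This is a minimal sketch that assumes the index is laid out as instance, address type, address length, then the address bytes, with address type 1 meaning IPv4 and 2 meaning IPv6 (the usual InetAddressType convention). I have not checked that against the MIB file itself, and `decode_peer_index` is just a name made up for this example.

```python
import ipaddress


def decode_peer_index(index):
    """Split an instance.addrtype.length.address index into (instance, address).

    Assumes address type 1 = IPv4 and 2 = IPv6, with a length-prefixed address.
    """
    parts = [int(p) for p in index.split(".")]
    instance, addr_type, length = parts[0], parts[1], parts[2]
    raw = bytes(parts[3:3 + length])
    if len(raw) != length:
        raise ValueError("index is shorter than its declared address length")
    if addr_type == 1 and length == 4:
        address = ipaddress.IPv4Address(raw)
    elif addr_type == 2 and length == 16:
        address = ipaddress.IPv6Address(raw)
    else:
        raise ValueError(f"unsupported address type {addr_type} with length {length}")
    return instance, address


# Suffix copied from the snmpget command above.
instance, peer = decode_peer_index("1.2.16.32.1.13.245.184.0.187.0.0.0.0.3.41.52.0.4")
print(instance, peer)
```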

@murrant Hi Tony,

Please take a look. This seems like a bug introduced in the 1.63 release.

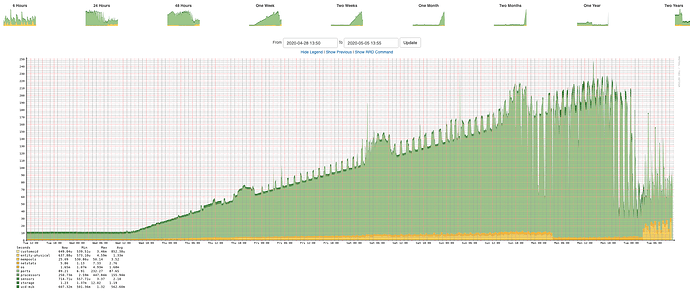

It perfectly correlates with running the update:

-rw-rw-r--. 1 librenms librenms 371503 Apr 29 12:04 daily.log-20200430

Also, that very much looks like a leak of some type on the device. Have you tried updating the firmware/contacting the vendor?

We have indeed; no news so far. But different platforms and software versions are affected.

7020SR

7280SR

7280QR

And EOS:

4.21.6F

4.21.5F

4.21.8M

4.21.3F

4.22.0F

We have also tried downgrading one poller to 1.62, and it doesn’t help ((

Hmm, this should not cause any load issue (the change in the polling code is quite small and not intensive).

On my side, I didn’t see any negative impact on our Arista platforms:

- 7280SRA (4.19.5M)

- 7280SR (4.20.8M)

- 7508 (4.20.8M)

- 7508N (4.20.8M)

Do you have the same spikes when you disable the bgp-peers module?

If you downgraded the poller to a version from before the BGP error code management was added, then it’s probably something else.

Have you tried restarting the snmpd process?

Yep, it seems that downgrading and restarting snmpd on the switches did the trick.

So it should be a bug in EOS 4.21.x - 4.22.0.

Update…

Nope, it returns after a while.

Would be great if you could narrow down a little more what is causing the issue.

Clearly it is a firmware bug, but maybe LibreNMS could work around it.

Just this week we upgraded a large number of our Arista devices from 4.21.8M to 4.22.5M (the version recommended to us by Arista support). We track LibreNMS master manually and updated last week, which included the BGP polling updates in PR 11424.

I’m not seeing a change in the polling times on our 7280SRs. Everything is pretty steady before and after the librenms update and the Arista update.

My read of the attached graph gave me the impression the ports module was the one ramping up in execute time, but that could be an effect of the CPU drag vs the size of the SNMP port table.

Seems like a memory leak:

Affected device:

#sh proc top once | i nmp

3868 root 20 0 1429m 1.4g 5624 S 0.0 4.5 6871:28 snmpd

27637 root 20 0 787m 396m 201m S 0.0 1.2 1:18.88 Snmp

Unaffected device:

#sh proc top once | i nmp

2954 root 20 0 731m 368m 196m S 0.0 1.2 215:54.55 Snmp

3851 root 20 0 16384 9500 6048 S 0.0 0.0 41:55.50 snmpd
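To put numbers on the difference, here is a small sketch that converts the RES column from the two outputs above into MiB. It assumes the standard top column order (PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND) and that values without a suffix are KiB. The helper names are made up for this example.

```python
def res_to_mib(value):
    """Convert a top-style memory value to MiB (plain numbers are assumed to be KiB)."""
    units = {"m": 1.0, "g": 1024.0, "t": 1024.0 * 1024.0}
    suffix = value[-1].lower()
    if suffix in units:
        return float(value[:-1]) * units[suffix]
    return float(value) / 1024.0


def snmp_memory(top_output):
    """Return {command: resident MiB} for each process line in top_output."""
    usage = {}
    for line in top_output.splitlines():
        fields = line.split()
        if len(fields) < 12:
            continue  # skip blank or truncated lines
        # Assumed columns: PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND
        usage[fields[11]] = res_to_mib(fields[5])
    return usage


affected = snmp_memory("""\
3868 root 20 0 1429m 1.4g 5624 S 0.0 4.5 6871:28 snmpd
27637 root 20 0 787m 396m 201m S 0.0 1.2 1:18.88 Snmp
""")
unaffected = snmp_memory("""\
2954 root 20 0 731m 368m 196m S 0.0 1.2 215:54.55 Snmp
3851 root 20 0 16384 9500 6048 S 0.0 0.0 41:55.50 snmpd
""")

ratio = affected["snmpd"] / unaffected["snmpd"]
print(f"snmpd resident memory: {affected['snmpd']:.0f} MiB vs "
      f"{unaffected['snmpd']:.1f} MiB ({ratio:.0f}x)")
```

The Snmp agent process is about the same size on both devices, so the growth is confined to snmpd.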

Disabling bgp-peers and restarting both processes works. At least the devices we applied the workaround to yesterday look stable: no CPU spikes, and the memory consumed by SNMP doesn’t grow.

Arista has confirmed a bug. It is reproduced when the SNMP MSS is configured to a relatively small value (< 1500b in our case) and a large amount of SNMP data is being transmitted (e.g. with the BGP module in place).

The fix for BUG484976 will be made available in the next upcoming maintenance releases in the 4.21/4.22 code train.