Hey, thanks for the response. To address your questions:

- The jobs are NOT overlapping with the old service (librenms-poller-service.service). There is never more than one poller.php instance (running ‘snmpbulkwalk’) for a particular device when using it.

I did watch the behavior of the old service for the ‘problem device’ (which consistently takes more than 60 seconds to poll): it simply lets the poll finish whenever it finishes, then starts the next run. This effectively keeps the device in a perpetual polling state (which is OK), but with NO overlap at any point in time.

OLD SERVICE:

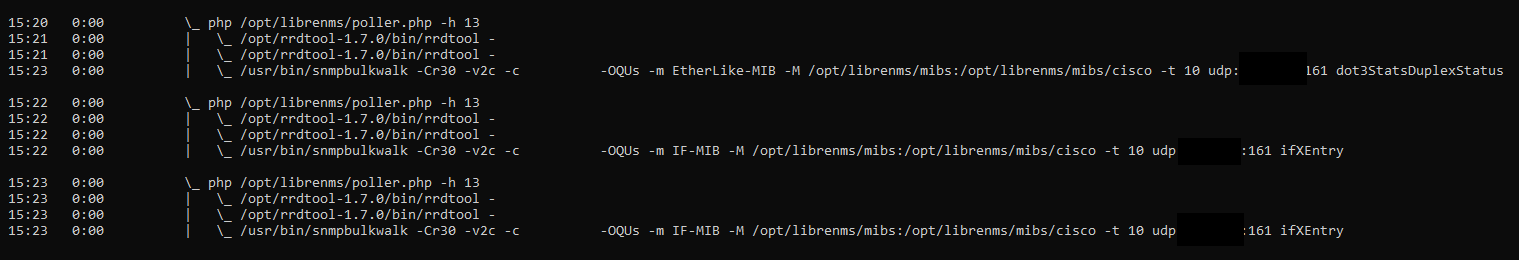

With the new service (librenms.service), jobs ARE overlapping. After 60 seconds (my global polling interval), another poller.php instance is spawned, even though the previous one is still finishing its run:

NEW SERVICE going crazy and spawning parallel jobs:

This eventually spirals out of control as more and more concurrent jobs are spawned (I counted 9 of them at one point…), effectively DoSing the device.
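To make the difference between the two behaviors concrete, here is a toy model (not LibreNMS code; `schedule` and `peak_concurrency` are hypothetical names) comparing a scheduler that starts a poll every interval regardless of earlier runs with one that waits for the previous run to finish, as the old service appears to. It assumes a constant poll duration; in practice the duration likely grows as the device gets more loaded, which would explain the spiral.

```python
from typing import List


def schedule(interval: int, duration: int, horizon: int, wait_for_previous: bool) -> List[int]:
    """Return poll start times (seconds) up to `horizon`.

    wait_for_previous=False: fixed-rate, a new poll every `interval` seconds.
    wait_for_previous=True: the next poll starts only once the previous one has
    finished (or after `interval`, whichever is later).
    """
    starts: List[int] = []
    t = 0
    while t < horizon:
        starts.append(t)
        t += max(interval, duration) if wait_for_previous else interval
    return starts


def peak_concurrency(starts: List[int], duration: int) -> int:
    """Maximum number of polls running at once; the peak always occurs at some start time."""
    return max(sum(1 for s in starts if s <= t < s + duration) for t in starts)


# A 150 s poll on a 60 s interval:
print(peak_concurrency(schedule(60, 150, 600, wait_for_previous=False), 150))  # 3
print(peak_concurrency(schedule(60, 150, 600, wait_for_previous=True), 150))   # 1
```

With fixed-rate scheduling the overlap is roughly ceil(duration / interval) jobs even when nothing slows down; waiting for the previous run caps it at one.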

So the core of the issue is definitely that the new service has a problem with locking (which is supposed to prevent double polling?), whereas locking works just fine with the old service.

- I must say I am very confused about how RRDtool works exactly; it seems a bit like arcane magic… Their docs do state, however: “Note, that if you do NOT supply new data points exactly every 300 [60 in my case] seconds, this is not a problem, RRDtool will interpolate the data accordingly.” (whatever that means)

Regardless, I am constantly missing one or two poll intervals (60 seconds in my case) for some devices without getting any gaps in the graphs, as shown in my previous message, and there is definitely NO overlap. I am as puzzled about it as you are :). All my RRDs are configured with a 60-second step.
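A possible explanation is RRDtool's heartbeat: a data source is only marked UNKNOWN when more than its heartbeat passes between two updates; shorter gaps are interpolated. Below is a heavily simplified sketch of that rule (it ignores PDP boundaries, xff and consolidation). The heartbeat values are assumptions, since the actual one for these RRDs isn't stated here; `rrdtool info` lists it per data source as `minimal_heartbeat`.

```python
from typing import List, Tuple


def unknown_gaps(update_times: List[int], heartbeat: int) -> List[Tuple[int, int]]:
    """Return (previous, next) update pairs whose spacing exceeds `heartbeat`.

    Simplified model: gaps longer than the heartbeat become UNKNOWN (a visible
    hole in the graph); shorter gaps are interpolated by RRDtool.
    """
    gaps: List[Tuple[int, int]] = []
    for prev, nxt in zip(update_times, update_times[1:]):
        if nxt - prev > heartbeat:
            gaps.append((prev, nxt))
    return gaps


# 60 s step: one missed poll (120 s gap), then three missed polls (240 s gap).
updates = [0, 60, 180, 420, 480]
print(unknown_gaps(updates, heartbeat=120))  # [(180, 420)]
print(unknown_gaps(updates, heartbeat=300))  # []
```

Under this model, missing one or two 60-second polls only leaves a hole if the heartbeat is shorter than the resulting 120 or 180 second gap.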

- It definitely looks like the spawning of overlapping jobs by the NEW service is the root cause here. Would it be possible to implement stricter locking there, akin to the one done by the old service?
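For illustration only, "stricter locking" could mean a per-device, non-blocking exclusive lock that a poll must acquire before starting, skipping the cycle if the previous poll still holds it. This is a minimal sketch assuming a Unix host and `fcntl.flock`; the lock-file location and the `device_poll_lock` / `poll_device` names are hypothetical and not how LibreNMS actually implements its locks.

```python
import fcntl
import os
import tempfile
from contextlib import contextmanager
from typing import Callable, Iterator

# Hypothetical lock directory, not a LibreNMS setting.
LOCK_DIR = tempfile.gettempdir()


@contextmanager
def device_poll_lock(device_id: int, lock_dir: str = LOCK_DIR) -> Iterator[bool]:
    """Yield True if we hold the per-device lock, False if another poll holds it."""
    path = os.path.join(lock_dir, f"poll-device-{device_id}.lock")
    fd = os.open(path, os.O_CREAT | os.O_RDWR, 0o644)
    try:
        try:
            # Non-blocking: fail immediately instead of queueing behind the running poll.
            fcntl.flock(fd, fcntl.LOCK_EX | fcntl.LOCK_NB)
            acquired = True
        except BlockingIOError:
            acquired = False
        yield acquired
    finally:
        os.close(fd)  # closing the descriptor also releases the flock


def poll_device(device_id: int, do_poll: Callable[[int], None]) -> bool:
    """Run do_poll only if no other poll of this device is in progress."""
    with device_poll_lock(device_id) as acquired:
        if not acquired:
            return False  # previous poll still running: skip this cycle
        do_poll(device_id)
        return True
```

The design choice is to skip rather than wait: a blocked job queueing behind the lock would still pile up, whereas a skipped one just means that cycle's data point is missing, which the RRD interpolation discussed above may absorb.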